Does first class seating cause air rage?

Posted: May 14, 2016 Filed under: Uncategorized

You may have heard about the recent paper “Physical and situational inequality on airplanes predicts air rage”. It claims that the existence of first-class seating on a plane causes air rage, especially if economy-class passengers have to walk past it on the way to their seats.

The paper received quite a lot of uncritical publicity from the likes of Science, who really ought to know better. I was looking forward to some thorough debunking, and clicked through to articles by Andrew Gelman and Runway Girl Network with great anticipation. But they were a bit of a let-down.

Both articles correctly pointed out that the paper merely showed correlation, not causation, and that all the speculation about inequality was just that – speculation. The RGN article also provided some useful background on the aviation industry, deducing that the airline in question was probably a US network carrier, whose only all-economy planes are short-haul regional jets (which turns out to be important!). They also made Science look pretty silly by pointing out that the air rage example Science provided was on an all-economy Air Asia flight.

But otherwise the complaints were fairly petty. Andrew Gelman mostly criticized the over-precision of the quoted coefficients in the paper, while RGN was disturbingly ad-hominem and got a bit hysterical about the paper’s failure to account for code-share flights. Sure, the paper should have acknowledged the existence of code-share flights in their data, but RGN couldn’t show how they would bias the results.

Now, my employer mostly flies me business class, so I’ll be damned if I’ll let this threat to my privilege go unchallenged. So here are the problems with the paper’s methodology.

The authors of the paper had access to flight data containing variables such as number of seats, flight distance, seat pitch, first-class configuration, delays, etc., as well as the number of air rage incidents that occurred.

They plugged all this data into a linear regression model, which generated a coefficient for each variable so as to best match the number of air rage incidents. Variables whose coefficients are large, positive, and statistically significant are treated as predictors of air rage, and that was the case with the variable relating to first class.

Now, linear regression is a wonderful tool – I’ve used it myself many times – but if you read the fine print you’ll find that it’s only valid if the thing you’re predicting really does vary linearly with each of the variables. And I suspect that the most important variable for predicting air rage – namely, flight duration – has a non-linear effect.

Another caveat with linear regression is that the variables you pick shouldn’t be strongly correlated with each other. If two variables are highly correlated, the way the coefficients are split between them can be somewhat arbitrary, which is a problem if one of the variables is highly predictive.

I can’t know for certain without seeing the (confidential) raw data, but I’d bet money that the relationship between flight distance and air rage incidents is upward-curving, maybe parabolic. In other words, people get angrier the longer they’ve been flying.

When you fit a straight line to a parabolic relationship, the line of best fit under-estimates incidents on long flights. The regression then compensates by assigning spurious coefficients to any other variables that correlate with long flights.

And that’s probably what happened here. Because the airline in question only ran all-economy configurations on short-haul, the first-class variable becomes a proxy for long flights, and was assigned a large coefficient to correct for the non-linearity of air rage incidents vs flight distance. Thus it was assumed to be a significant predictor of air rage.
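To see how this can happen, here’s a minimal sketch in Python using made-up numbers, not the paper’s data. It assumes incidents grow with the square of flight duration, that all-economy flights are short-haul while first-class flights are long-haul, and that first class has no effect whatsoever. It then fits an ordinary least-squares model with an intercept, duration and a first-class flag, using small hand-rolled helpers (`solve` and `ols` are illustrative names) so the example needs no libraries.

```python
# Synthetic, made-up data: NOT the paper's data set.
# By construction, incidents grow with the square of flight duration and
# first class has no effect at all.


def solve(a, b):
    """Solve the square linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Ordinary least squares via the normal equations (X^T X) b = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# All-economy flights are short-haul (0.5-3 h); flights with a first-class
# cabin are long-haul (5-6 h), so the first-class flag tracks duration.
short_haul = [0.5 + 0.25 * i for i in range(11)]
long_haul = [5.0, 5.5, 6.0]
durations = short_haul + long_haul
first_class = [0] * len(short_haul) + [1] * len(long_haul)
incidents = [0.1 * d ** 2 for d in durations]  # quadratic, no first-class term

rows = [[1.0, d, fc] for d, fc in zip(durations, first_class)]
intercept, b_duration, b_first_class = ols(rows, incidents)
print(f"duration coefficient:    {b_duration:.3f}")
print(f"first-class coefficient: {b_first_class:.3f}")
```

With these numbers the first-class flag comes out at roughly 1.17, purely because it lets the straight line bend upwards for the long flights. The size, and even the sign, of the spurious coefficient depends on how flights are spread across durations, so treat this as an illustration of the mechanism rather than a reconstruction of the paper.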

Collinearity may not be a major factor in this study, but it’s still problematic that the first-class variable probably correlates strongly with flight distance in the data set they used. That makes it difficult to disentangle the two effects.
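The strength of that overlap is easy to quantify. This sketch, again on a hypothetical fleet where first class only appears on long-haul flights, computes the correlation between the first-class flag and flight duration, plus the variance inflation factor, which is 1/(1 - r**2) when there are only two predictors and measures how much the correlation inflates the variance of each coefficient estimate.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; with a 0/1 variable this is the point-biserial r."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical fleet: all-economy flights are short-haul,
# first class only appears on long-haul flights.
short_haul = [0.5 + 0.25 * i for i in range(11)]  # hours
long_haul = [5.0, 5.5, 6.0]                       # hours
durations = short_haul + long_haul
first_class = [0] * len(short_haul) + [1] * len(long_haul)

r = pearson(durations, first_class)
vif = 1 / (1 - r ** 2)  # variance inflation factor for two predictors
print(f"r = {r:.2f}, VIF = {vif:.1f}")
```

In this made-up fleet r comes out at about 0.9, so the two variables largely tell the regression the same thing.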

There are two other problems with the paper’s methodology. They may not bias the result, but really should be addressed.

- Instead of using flight distance as a variable, they should have used flight duration. It’s time in the air that affects air rage, not distance traveled, and flight duration information is readily available.

- The variable being predicted was incidents per flight. Now, the authors tried to correct for that by providing number of seats as a variable, but that’s unreliable and could lead to a bias against larger aircraft. A better technique would be to divide the number of incidents by the number of seats, and predict the incidents per seat. Even better would be incidents per passenger, if passenger numbers are available, since that would correct for varying load factors on different routes.

There are a few ways the authors could improve their analysis. For a start, they should use linear regression to predict incidents per passenger-hour instead of incidents per flight, while keeping flight duration as a variable. If incidents per passenger grow quadratically with flight duration, incidents per passenger-hour grow linearly, so this alone would correct for the non-linearity.
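A quick sketch of why the per-passenger-hour rate helps, using a made-up model in which expected incidents per passenger are `A*t**2 + B*t` for a flight of `t` hours (the constants and the fleet are hypothetical). Dividing by passengers and hours leaves `A*t + B`, which a straight line fits exactly, whereas incidents per passenger still curve.

```python
def fit_line(xs, ys):
    """Least-squares straight line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def worst_residual(xs, ys):
    """Largest absolute miss of the best-fit line: ~0 means the data are linear."""
    intercept, slope = fit_line(xs, ys)
    return max(abs(y - (intercept + slope * x)) for x, y in zip(xs, ys))


# Hypothetical flights: (duration in hours, passengers on board).
flights = [(1.0, 70), (1.5, 90), (2.0, 120), (3.5, 160), (5.0, 180), (8.0, 250), (11.0, 300)]
A, B = 0.002, 0.001  # made-up: expected incidents per passenger = A*t**2 + B*t

durations = [t for t, _ in flights]
per_flight = [p * (A * t ** 2 + B * t) for t, p in flights]
per_passenger = [inc / p for inc, (_, p) in zip(per_flight, flights)]
per_passenger_hour = [inc / (p * t) for inc, (t, p) in zip(per_flight, flights)]

print(worst_residual(durations, per_passenger))       # curved: clearly non-zero
print(worst_residual(durations, per_passenger_hour))  # A*t + B: zero up to rounding
```

Note that dividing by passengers also removes the per-flight bias against larger aircraft, independently of the duration effect.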

However, if it’s true that the all-economy flights are all on small short-range aircraft, then you still need to extrapolate a long way out of a tight cluster to compare them with the big long-range aircraft. That’s statistically dubious, and the only way forward may be to discard all flights longer than, say, 90 minutes.

Best of all would be to add a bunch of long-haul all-economy flights – such as those flown by Air Asia and Ryanair – to the analysis and see what coefficients are generated. Given the feral nature of those flights, I suspect the first-class effect would vanish, or even become negative.

Fill the universe with life

Posted: June 14, 2015 Filed under: Uncategorized

I was thinking about the economics of building human-level artificial intelligence. Some people refer to this as the singularity, but I dislike the term because it implies a vertical asymptote, which is misleading.

Anyway, there are two immediate consequences …

- It will soon lead to artificial super-intelligence. Once we figure out a design for intelligence we should be able to scale it arbitrarily, by adding more CPU and memory – barring legislative responses such as the Turing Police.

- It will become cheaply available to the masses. Unless the hardware relies on a rare element, the only limiting factor to mass deployment will be energy: to manufacture them and to run them. However, there are plenty of untapped sources of clean energy – geothermal, thorium fission, tidal – that aren’t used because they’re not cost-effective. But they’re usually expensive only because of high labour costs in the supply chain, and these will disappear with the availability of AI.

So, once everyone has unlimited super-intelligent labour at their command, what are they going to do with it? Travel to the stars? Build mind-blowing works of art? Of course. But what could you do that would push the limits of a super-intelligence? I’d try filling the universe with life.

Three rules …

- Not DNA-based. Simply adapting DNA-based life is too easy, and in any case it will only work in environments with liquid water.

- Must not compete with existing life. The aim is to increase the amount of life in the universe – and make better use of the available energy – not to engage in zero-sum competition with life in existing niches.

- Proper self-replicating life. In other words life forms that consume energy and raw materials from their surroundings and use them to grow and reproduce. Preferably reproducing with mutations and genetic mixing so they can evolve. Factory-made robots don’t count.

In theory life can exist wherever there is an energy source and suitable raw materials. So the gas clouds of Jupiter and the upper atmosphere of Venus are obvious candidates, as are places like Titan and Europa.

But let’s get ambitious and non-organic. After all, we’ll have super-intelligent AIs working for us. How about creatures that live in the sun’s corona? Giant gaseous life-forms that live in nebulae. Something that survives and reproduces in the molten core of a planet. Or a purely space-based ecosystem of creatures that eat orbiting dust and rocks, and navigate using the solar wind and magnetic fields.

Difficult challenges, sure, and well beyond our current capabilities. But the pay-off would be enormous. Biodiversity is an intrinsically beneficial outcome, providing resilience in the face of calamities, and greater efficiency in the utilization of resources. If things go horribly wrong, it increases the likelihood that life will survive and recover.

Of course, there are some mutually-exclusive motives that can drive the design of new life …

- Curiosity. Build the minimum viable life form for an environment and see what it evolves into. Some of the adaptations may be useful to us, in the way that organisms living near deep-sea vents secrete enzymes that are now used in industrial processes.

- Design for intelligence. Analogous to biodiversity, cultural diversity leads to greater Ricardian gain from trade. The more different a culture is, the more likely they are to have something unique to trade with us, to our mutual benefit. And trading with an intelligent species that dwells in the Earth’s mantle would be pretty awesome.

- Design to host human consciousness. This raises many issues, but imagine if these life forms could host human minds. Maybe not running at the same speed, and probably with access to different senses, but with a human personality. “Humans” could then colonize the universe and survive for billions of years.

If you’re expecting me to conclude with “how to get there from here” speculation, it’s not going to happen. We are a long way from human-level intelligence, and I haven’t seen any approaches that show much promise. So I guess this article counts as science fiction.

Fixing online dating

Posted: January 12, 2015 Filed under: Uncategorized

Online dating: a huge opportunity if you can make it work

Periodically I survey the internet landscape looking for business opportunities. In particular I look for online services that (a) don’t work very well and (b) can be fixed with better technology.

I haven’t found many. All the obvious online services work about as well as they possibly can. When it comes to search, retail, share trading, maps, video, music, and messaging, it’s hard to see how the services could be improved. Admittedly I missed the picture sharing niche – now filled by Instagram and Snapchat – but that’s probably because I’m not a teenager!

Online banking is an area where there’s a lot of room for improvement, but the constraint there isn’t technological – it’s mostly regulatory – and the barriers to entry are huge. Maybe Facebook or Google could launch a global banking service that disrupts the industry, but a start-up couldn’t.

No, the only niche I’ve found that fits my criteria is online dating. People spend enormous amounts of time on dating sites, filtering through a lot of noise, and occasionally going on dates with people who, by all accounts, may as well have been selected at random. Many people eventually give up in frustration.

So, what would an ideal dating site look like? Well, it would collect your details. It would analyze them using complex algorithms and match them against everyone else’s details. Then it would return a list of people who are the best you are going to get, and who are guaranteed to find you attractive. Simple! Although I’m not sure about the revenue model …

Why online dating doesn’t work

We’re a long way from achieving this ideal service – assuming such a thing is even possible – so let’s first figure out why the existing services don’t work.

To begin with, let’s assume that everyone can be assigned an attractiveness score from 1 to 10. If all men and all women stood in lines sorted by “attractiveness”, the top 10% would be assigned a 10, the next 10% a 9, and so on. All the tens would go out with tens, the fives with fives, and so on. There are some wrinkles when it comes to differences in age, education, and religion, but basically that’s how the real world works.
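The scoring scheme above is just a decile ranking. Here’s a minimal sketch, with `decile_scores` as an illustrative name and made-up ratings standing in for whatever raw judgement of attractiveness you start from.

```python
def decile_scores(ratings):
    """Map raw ratings to 1-10: top 10% -> 10, next 10% -> 9, ..., bottom 10% -> 1.

    Ties are broken by input order (Python's sort is stable, even with
    reverse=True), and with fewer than ten people some scores go unused.
    """
    n = len(ratings)
    order = sorted(range(n), key=lambda i: ratings[i], reverse=True)
    scores = [0] * n
    for rank, i in enumerate(order):  # rank 0 = highest rating
        scores[i] = 10 - (10 * rank) // n
    return scores


# Hypothetical raw ratings for 20 people.
ratings = [3.1, 8.7, 5.5, 9.9, 1.2, 6.4, 7.0, 2.8, 4.9, 8.1,
           5.0, 6.9, 3.7, 9.2, 2.2, 7.6, 4.1, 1.9, 5.9, 8.4]
print(decile_scores(ratings))
```

With 20 people, each score from 1 to 10 goes to exactly two of them.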

Ranking women by attractiveness is fairly straightforward because it’s all about looks. Anyone can do it, just using photos, and will come up with the same rankings, regardless of whether they’re male or female, gay or straight, and irrespective of culture. The ability seems to be hard-wired into the human brain, although interestingly it’s not something computers can do yet.

Where things get difficult is ranking men. Looks (especially height and muscle) count for a bit, but women also want to know whether they’re successful, confident, wealthy, considerate, funny, yada yada yada. You can’t tell those things from a photo, and probably not from a written profile either.

So how do you figure out a guy’s ranking? Well, I can think of three ways …

- Date him. This is time-consuming. If you believe the Pick Up Artist community it takes a woman 7 hours’ worth of dates to decide if a man is worth sleeping with. However, it is the gold standard, and a successful matchmaking algorithm may have to somehow replicate it.

- Self-selection. One person who knows a guy’s ranking is the guy himself, at least at an instinctive level. Men will usually only approach women of the same level of attractiveness. They won’t go much higher – rejection hurts – and they won’t go much lower – hey, they can do better. I suspect women know this intuitively, and are more likely to consider a guy who has the confidence to approach them.

- Look at the exes. There may be the odd aberration, but if a guy’s exes are mostly threes and fours, he’s probably a three or four. So be careful who you have your arm around in Facebook photos!

So why is this relevant? Well, consider how dating sites work. Men contact the women, guided by their looks, just like in the real world. But there’s no face-to-face rejection. There’s no downside to contacting someone out of your league, and a huge upside. So all the guys do it.

As a result, the attractive women get flooded with requests. But they have no way of sorting the good from the bad because there’s no way to evaluate a guy’s attractiveness. They may resort to some arbitrary selection criteria, but since personality is so important, the criteria are probably no better than a coin toss. So they end up going on dates with guys who are, on average, worse than the self-selected ones they meet face-to-face. Eventually they give up on the online world and go back to the bars, book clubs, or wherever it is that attractive women hang out.
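A toy model makes the flooding effect concrete. It assumes one man and one woman at each score from 1 to 10, that in the real world men approach only women at their own level, and that online they message every woman at or above their level. Both assumptions are simplifications of the argument above, not data, and `inbox` is an illustrative name.

```python
LEVELS = range(1, 11)  # attractiveness scores 1-10


def inbox(woman_score, online):
    """Toy model with one man and one woman at each score.

    Offline (self-selection): only the man at her own level approaches.
    Online (no rejection cost): every man at or below her level messages her.
    Returns (messages received, fraction from men at her own level).
    """
    if online:
        senders = [m for m in LEVELS if m <= woman_score]
    else:
        senders = [woman_score]
    return len(senders), senders.count(woman_score) / len(senders)


for score in (10, 5, 1):
    print(score, inbox(score, online=False), inbox(score, online=True))
```

In this model a ten receives ten messages online instead of one, and only one in ten comes from a man in her league, while a one sees no change at all.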

Things aren’t great for the attractive men either. They have no way to stand out from the crowd and line up a date with the attractive women they usually hook up with, so they also give up and go back to the bars, etc. And with the attractive people all leaving, the site goes into a bit of a death spiral, propped up only by an infusion of naive fresh meat.

Possible solutions

Let’s look at a few possible ways to improve things.

- A first-principles matchmaking algorithm. I have no idea how this would work. Not only would it have to model a guy’s personality, it would have to model it when faced with a particular woman. This probably requires a full brain upload and lots of simulations, which is way beyond current technology.

- Add rejection to online dating. It’s an effective feedback mechanism in the real world, so why not in the online world as well? Well, in the real world, a woman usually has enough information to quickly identify guys who are clearly not in her league and send them on their way. But in the online world she doesn’t, and the rejection signal wouldn’t be much better than random. Rats who receive random electric shocks tend to end up depressed and unhappy, so this is almost certainly a bad idea.

- Include pictures of guys’ exes. In theory I think this would be a brilliant solution, especially if computers eventually get as good at ranking female beauty as people. But in practice there are serious privacy concerns – can you post pictures of exes without their consent? – and a strong incentive to game the system with selective pictures. But honestly, Facebook kind of works this way already, which is why people these days are more likely to exchange Facebook details than a phone number with someone they meet at a bar.

So I have to concede defeat here. I can’t figure out a service that works better than guys approaching women and saying hi. Maybe I should open a bar.

The Matt Hat Kickstarter campaign

Posted: April 3, 2014 Filed under: Uncategorized

I’ve finalized the design of the augmented reality baseball cap – now called the Matt Hat – and it’s available through a Kickstarter campaign. Check it out!

Heritage Health Prize results

Posted: October 19, 2013 Filed under: Uncategorized

A few days ago I attended a talk by the winner of the Heritage Health Prize, and it reminded me that I should document the outcome of my team’s efforts.

My team-mate and I competed under the name Planet Melbourne. We submitted entries up until the first milestone at six months and didn’t make any submissions after that.

This apparently made us a useful reference point during the following milestones. Because the milestone rankings didn’t show scores, movements in a team’s ranking relative to our position gave a rough-and-ready indication of the improvement in their score.

Anyway, our rankings were as follows …

- Milestone 1 (6 months): 5th

- Milestone 2 (12 months): 5th

- Milestone 3 (18 months): 14th

- Finish (24 months): 25th

After the initial announcement of the winners, there were a whole bunch of disqualifications, probably of people competing under multiple accounts. As a result, our final official ranking improved to 17th.

Visor, version 2

Posted: August 16, 2013 Filed under: Uncategorized

I actually got the new head-up display manufactured a few months ago, but haven’t had time to blog about it until now.

Here it is, modelled by my co-worker Scott. Notice the HTC Tattoo held in place with a rubber band. The information appearing on its screen is reflected back off the visor to fill your field of view at a virtual distance of about a metre.

At first glance it looks pretty good, but there are two problems. First, it’s too reflective. I requested aluminium coating on the inside surface at a thickness that would let through 20% of external light, similar to a pair of sunglasses. Unfortunately they coated both sides, so it’s only letting through about 4%. You can sort of see my couch in the background, but it’s faint, so the effect is more virtual reality than augmented reality. Still, that’s easy to fix in the next version.
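The arithmetic behind the 4% figure: two coatings that each pass about 20% of the light pass about 20% of 20%. This sketch assumes the coatings act independently and ignores absorption in the plastic and light bouncing back and forth between the two coated surfaces.

```python
def transmitted_fraction(per_coating, coatings):
    """Fraction of outside light that gets through, assuming each coating
    independently transmits `per_coating` and ignoring absorption in the
    plastic and repeated reflections between the coatings."""
    return per_coating ** coatings


print(f"one side coated:   {transmitted_fraction(0.20, 1):.0%}")
print(f"both sides coated: {transmitted_fraction(0.20, 2):.0%}")
```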

More serious is the image distortion. I designed the visor using the optics I learned in high school, namely how a parabola can magnify an object and make it appear further away. Well, they lied to me. It turns out parabolic reflectors only work when viewed along the axis, and when they’re viewed off-axis, e.g. from your left and right eyes, the image gets distorted, especially at the edges.

You can see in the picture above how the “22:31” text is sloping down, and that’s viewed from a camera that was fairly close to the axis. Viewed from your eyes the slope is worse, and, more important, the distortions are different for each eye so the images don’t line up. That makes it impossible to read text.
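The off-axis problem shows up even in a crude 2D ray trace. This is a minimal sketch, not a model of the actual visor: it sends parallel rays at the parabola y = x²/(4f), reflects them using the surface normal, and records where each reflected ray crosses the focal plane y = f. On-axis, every ray lands at the focus; tilt the incoming rays by 10° and they spread out. The focal length, aperture and tilt are arbitrary illustrative values, and the aperture must stay below 2f so every reflected ray heads back up past the focal plane.

```python
import math


def focal_plane_hits(f, tilt_deg, aperture=1.0, n_rays=21):
    """Trace parallel rays, tilted by tilt_deg from the axis, off the 2D
    parabolic mirror y = x**2 / (4 f). Returns the x coordinate where each
    reflected ray crosses the focal plane y = f. Assumes aperture < 2 f
    and a small tilt."""
    tilt = math.radians(tilt_deg)
    dx, dy = math.sin(tilt), -math.cos(tilt)  # incoming direction, heading down
    hits = []
    for i in range(n_rays):
        x0 = -aperture + 2 * aperture * i / (n_rays - 1)  # where the ray meets the mirror
        y0 = x0 ** 2 / (4 * f)
        # Unit normal at (x0, y0), from the gradient of y - x**2 / (4 f).
        nx, ny = -x0 / (2 * f), 1.0
        length = math.hypot(nx, ny)
        nx, ny = nx / length, ny / length
        # Law of reflection: r = d - 2 (d . n) n
        dot = dx * nx + dy * ny
        rx, ry = dx - 2 * dot * nx, dy - 2 * dot * ny
        # Follow the reflected ray until it reaches the focal plane.
        t = (f - y0) / ry
        hits.append(x0 + t * rx)
    return hits


def spread(xs):
    """Width of the patch of light on the focal plane: 0 means a sharp focus."""
    return max(xs) - min(xs)


print(f"on-axis spread:  {spread(focal_plane_hits(1.0, 0)):.2e}")
print(f"10° tilt spread: {spread(focal_plane_hits(1.0, 10)):.2e}")
```

A real visor is a 3D surface viewed from two eye positions, so this only shows the qualitative effect: the parabola is perfect on its axis and degrades as soon as you look at it from anywhere else.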

There’s no good solution to this. I’m writing some ray-tracing software to generate a curved surface that will show a separate image to each eye, but it has drawbacks. Each eye won’t see the entire image, and I suspect it’s going to be fairly sensitive to the position of the eyes relative to the visor.

At least this time I’ve learned a new trick for evaluating a design cheaply. I save the design in STL format, load it into Blender, make it a mirrored surface, and render it with ray tracing. If the reflected checker pattern is undistorted from the two eye positions then I’ve got something that works.
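For the Blender check, any mesh written as STL will do. Here’s a minimal sketch that triangulates a parabolic dish z = (x² + y²)/(4f) over a square grid and writes it in the plain-text (ASCII) STL format, with each facet normal computed from a cross product. The dish and its dimensions are hypothetical stand-ins for the real visor surface, and the function names are illustrative.

```python
def parabolic_patch(f, half_width, n):
    """Triangulate z = (x**2 + y**2) / (4 f) over an n x n grid of vertices."""
    step = 2 * half_width / (n - 1)

    def vertex(i, j):
        x = -half_width + i * step
        y = -half_width + j * step
        return (x, y, (x * x + y * y) / (4 * f))

    tris = []
    for i in range(n - 1):
        for j in range(n - 1):
            a, b = vertex(i, j), vertex(i + 1, j)
            c, d = vertex(i + 1, j + 1), vertex(i, j + 1)
            tris += [(a, b, c), (a, c, d)]  # counter-clockwise seen from +z
    return tris


def facet_normal(a, b, c):
    """Unit normal of triangle abc via the cross product (b - a) x (c - a)."""
    ux, uy, uz = (b[k] - a[k] for k in range(3))
    vx, vy, vz = (c[k] - a[k] for k in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5 or 1.0
    return nx / length, ny / length, nz / length


def to_ascii_stl(name, triangles):
    """Serialize triangles as an ASCII STL string."""
    lines = [f"solid {name}"]
    for tri in triangles:
        nx, ny, nz = facet_normal(*tri)
        lines.append(f"  facet normal {nx:e} {ny:e} {nz:e}")
        lines.append("    outer loop")
        for x, y, z in tri:
            lines.append(f"      vertex {x:e} {y:e} {z:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Hypothetical dish: 10 cm focal length, 10 cm square, 5 x 5 vertex grid.
stl = to_ascii_stl("visor", parabolic_patch(f=0.1, half_width=0.05, n=5))
```

Writing `stl` to a `.stl` file gives something Blender’s STL importer can load; a finer grid (larger `n`) gives a smoother reflection.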

Head-up display update

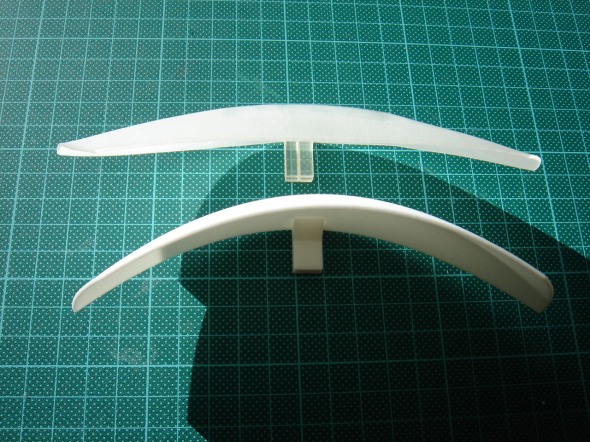

Posted: May 19, 2013 Filed under: Uncategorized

I’ve been having trouble 3D printing the transparent visor needed for my head-up display. The part sent to me by Shapeways was seriously warped and not at all transparent. So I complained about the warping, and they gave me a credit which I spent on printing the visor in white polished plastic.

The white version let me test the form factor (which seems OK), and I’ll glue some reflective film to the inside to check the optics.

If it passes that test I’ll get it manufactured properly. I’ve found a local prototyping firm who can make the visor using CNC and mirror-coat it using vapour deposition. Not cheap – we’re talking several hundred dollars – but it’ll be done properly.

Quadcopters

Posted: March 9, 2013 Filed under: Uncategorized

Seeing this video made me realize that quadcopters are more advanced than I thought, which got me thinking about potential applications. One that came to mind was human-computer interaction.

To interact with someone as an equal we expect them to be at eye level (which is a huge problem for people in wheelchairs), and I assume the same will apply if we ever deal with intelligent machines.

In science fiction the usual solution is to put the intelligence in human-sized robots, such as C-3PO and Robby the Robot. Smaller robots, such as R2-D2 and Wall-E, are generally portrayed as being child-like and inferior.

However, in his Culture novels, science fiction author Iain M Banks has another approach – small robots that float at eye level. These robots, called drones, range in size from hockey pucks up to rubbish bins, and are usually far more intelligent than the humans they deal with. And I suspect we could build a half-decent drone using existing quadcopter technology.

The intelligence behind the ‘copter would be housed remotely, and the only extra features you’d need on-board would be wireless comms, a decent speaker, a camera, a glowing component to show “emotion”, and centimetre-accuracy navigation. Apart from the centimetre accuracy, that’s mostly stuff you’d find in a cheap smartphone. I’d also like it to carry out “nodding” and “head shaking” manoeuvres, but I assume that’s already possible with quadcopters.

I’d be really interested to see how people interact with a talking quadcopter. Would they actually engage as though it were alive, or would they treat it as just another computer, like a flying automatic teller machine? If they do engage, I could imagine quadcopter drones being used as tour guides and customer service reps.

On an unrelated note, does anyone know why the quadcopter is the dominant design? Surely a tri-copter would be just as stable, and cheaper to manufacture?

Update: I don’t know why I ask speculative questions when I can just look up Wikipedia. According to this page, four rotors make sense because they can be arranged as two counter-rotating pairs whose torques cancel out, providing more stability. And varying the rotor speeds gives you control over three axes of rotational motion, so “nodding” and “head shaking” are definitely possible.
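Those three axes come from varying rotor speeds. Here’s a minimal sketch of a motor mixer for an X-configuration quadcopter under assumed conventions: front-left and rear-right rotors spin clockwise and the other two counter-clockwise; positive roll lifts the left side, positive pitch lifts the nose, and positive yaw turns the body counter-clockwise seen from above. Real flight controllers differ in motor order and sign conventions, and `mix` is an illustrative name.

```python
def mix(throttle, roll, pitch, yaw):
    """Return rotor speeds for (front_left, front_right, rear_right, rear_left).

    Yaw works by speeding up one counter-rotating pair and slowing the other,
    so the rotors' reaction torques no longer cancel.
    """
    return (
        throttle + roll + pitch + yaw,  # front-left, spins clockwise
        throttle - roll + pitch - yaw,  # front-right, spins counter-clockwise
        throttle - roll - pitch + yaw,  # rear-right, spins clockwise
        throttle + roll - pitch - yaw,  # rear-left, spins counter-clockwise
    )


print(mix(10, 0, 2, 0))  # "nod": nose up
print(mix(10, 0, 0, 2))  # "head shake": turn counter-clockwise
```

Alternating positive and negative pitch commands would give the “nod”, and alternating yaw commands the “head shake”; in each case the total thrust stays at four times the throttle.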

Sandpaper

Posted: March 2, 2013 Filed under: Uncategorized

It’s always amazing what obscure products you can buy on eBay. In this case, a 10-pack of assorted sandpaper.

Time to start sanding the visor.

Second part arrives

Posted: February 19, 2013 Filed under: Uncategorized

The transparent visor part arrived yesterday from Shapeways.

It’s not very transparent, but apparently with sanding and polishing it becomes clear. It’s also very fragile – a piece broke off when I tried to attach it to the first part and it had to be glued back on.

Now that I’ve got my hands on the part I can see a few things that need improving.

- The visor needs to be thicker. It’s currently 1.2mm thick, and will be difficult to sand and polish without breaking. At least 1.5mm next time.

- It needs a shorter focal length. The visor sits too far forward on the brim of the cap and doesn’t really provide full field-of-view coverage. Shortening the focal length will move it back and increase its curvature, hopefully solving both problems.

- The attachment mechanism needs rethinking. The tolerances are too tight – this can be fixed with sanding, but that’s not suitable for mass production. It can fail when the visor material is too fragile. It’s too complex for injection moulding. And it partly obscures the phone’s screen.
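A rough check on why a shorter focal length pulls the visor back, using the thin-mirror equation 1/d_o + 1/d_i = 1/f with d_i negative for a virtual image. To keep the virtual image about a metre away, the screen must sit just inside the focal length, so a shorter f puts the visor closer to the screen; and the parabola y = x²/(4f) has curvature 1/(2f) at its vertex, so it also gets more curved. The focal lengths below are hypothetical, and the thin-mirror formula is only a paraxial approximation for a visor viewed off-axis.

```python
def screen_to_visor(f, image_distance):
    """Screen-to-mirror distance that puts the virtual image `image_distance`
    behind a concave mirror of focal length f (thin-mirror equation
    1/d_o + 1/d_i = 1/f, with d_i negative for a virtual image)."""
    d_i = -image_distance
    return 1 / (1 / f - 1 / d_i)


for f in (0.10, 0.08):  # hypothetical focal lengths in metres
    d_o = screen_to_visor(f, image_distance=1.0)
    print(f"f = {f:.2f} m: screen {d_o * 100:.1f} cm from visor, "
          f"vertex curvature {1 / (2 * f):.2f} per metre")
```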

Still, I’ll proceed with what I’ve got. Get the visor sanded and polished, then hopefully mirror coated, and see if it works.